In recent years, serverless technology has become a popular approach for developing and deploying applications. With its promise of scalability, cost-efficiency, and reduced operational overhead, engineers are increasingly adopting serverless architectures. However, like any technology, serverless comes with its own set of challenges and considerations. In this comprehensive guide, we will explore the various aspects of serverless technology and provide engineers with a deeper understanding of its benefits and limitations.

Evaluating the Effectiveness of Serverless Technology

When considering serverless technology for your applications, start by evaluating how well it meets your specific requirements. One of the first questions is how serverless affects the balance between cost and performance. Serverless architectures can offer significant cost savings over traditional server-based approaches, particularly for workloads with uneven or unpredictable traffic, but those savings need to be weighed against the performance trade-offs described below.

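In a serverless environment, applications are divided into small functions that run in response to events. Each function executes in an isolated environment, typically a container or a lightweight virtual machine. When no initialized environment is available to serve a request, the platform has to create one and load the function's code before running it, and the resulting delay is known as a cold start. Overcoming cold start challenges is vital to ensuring good performance in serverless computing. By understanding the factors that contribute to cold starts, such as package size, runtime choice, and initialization work, and by implementing strategies to mitigate them, engineers can minimize latency and deliver a more responsive user experience.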

Another key aspect to consider is the efficiency of the functions themselves. On AWS these are Lambda functions, and other cloud providers offer equivalents; they are the building blocks of serverless applications. Each function is designed to execute a specific task and can be developed in a range of programming languages. Maximizing the efficiency of these functions requires careful consideration of factors such as memory allocation, function duration, and the size of deployment packages. By optimizing these aspects, engineers can achieve faster execution times and reduce unnecessary resource utilization.
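To make the memory and duration trade-off concrete, here is a rough sketch of how memory-time billing works on platforms such as AWS Lambda, which charge by GB-seconds and allocate CPU in proportion to configured memory. The price constant and the two measured durations are placeholders invented for illustration, not real figures, and per-request charges are ignored.

```python
def invocation_cost(memory_mb, duration_ms, invocations, price_per_gb_second):
    """Estimate compute cost when billing is based on memory-time (GB-seconds)."""
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000) * invocations
    return gb_seconds * price_per_gb_second


# Placeholder price for illustration only; check your provider's price list.
ASSUMED_PRICE_PER_GB_SECOND = 0.00002

# Hypothetical measurements: doubling memory more than halves the duration.
small = invocation_cost(512, 800, 1_000_000, ASSUMED_PRICE_PER_GB_SECOND)
large = invocation_cost(1024, 350, 1_000_000, ASSUMED_PRICE_PER_GB_SECOND)

print(f"512 MB:  {small:.2f}")
print(f"1024 MB: {large:.2f}")
```

Under these assumed numbers the larger allocation is both faster and cheaper, which is why memory settings are worth measuring rather than guessing.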

Furthermore, determining the latency your serverless workloads can tolerate is crucial for delivering a seamless user experience. Latency requirements vary with the nature of the application. Interactive applications, such as chatbots or multiplayer games, need very low latency so that responses feel immediate. Batch processing tasks, on the other hand, can usually tolerate much longer response times. The ability to measure and tune the latency of your serverless workloads can greatly enhance the performance and responsiveness of your applications.
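Latency targets are usually expressed as percentiles rather than averages, because a few slow requests (cold starts, for instance) can hide behind a healthy mean. A minimal sketch using the nearest-rank method follows; the sample values and the 100 ms target are made up for illustration.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def meets_target(samples_ms, p, target_ms):
    """Check whether the p-th percentile latency is within the target."""
    return percentile(samples_ms, p) <= target_ms


# Hypothetical latencies in milliseconds; one request hit a slow path.
latencies_ms = [12, 15, 11, 14, 13, 240, 16, 12, 13, 15]

print(percentile(latencies_ms, 50))          # median latency
print(percentile(latencies_ms, 95))          # tail latency
print(meets_target(latencies_ms, 95, 100))   # does the tail meet a 100 ms target?
```

An interactive workload would typically set its target on a high percentile, while a batch job might only care about total completion time.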

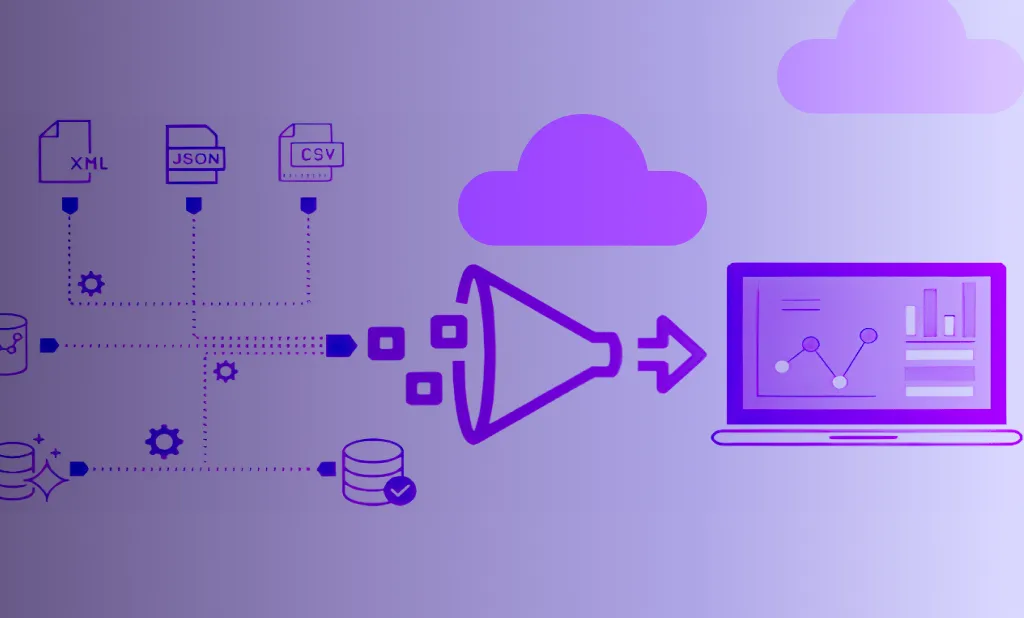

Scalability is one of the key advantages of serverless architecture. Serverless applications scale dynamically with demand, so you only pay for the resources you actually use. Achieving scalability and efficiency in practice requires a solid understanding of the underlying cloud platform's auto-scaling behavior, including its concurrency limits, and configuring your applications accordingly. By optimizing the scalability of your serverless applications, you can achieve cost savings and ensure high availability, even during peak load periods.
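A quick way to reason about scaling is to estimate how many function instances must run at once. By Little's law, steady-state concurrency is roughly the request rate multiplied by the average duration. The sketch below applies that estimate; the traffic figures and the concurrency limits are hypothetical, and real limits depend on your provider and account settings.

```python
import math


def required_concurrency(requests_per_second, avg_duration_seconds):
    """Little's law: concurrent executions = arrival rate x average time in system."""
    return math.ceil(requests_per_second * avg_duration_seconds)


def exceeds_limit(requests_per_second, avg_duration_seconds, concurrency_limit):
    """True if the estimated concurrency would exceed the configured limit."""
    return required_concurrency(requests_per_second, avg_duration_seconds) > concurrency_limit


# Hypothetical peak: 200 requests per second, 250 ms per invocation.
print(required_concurrency(200, 0.25))
print(exceeds_limit(200, 0.25, concurrency_limit=40))
```

The same formula shows why shortening function duration helps scalability: halving the duration halves the concurrency needed for the same traffic.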

Seamless integration with cloud services is another aspect that engineers should consider when adopting serverless architecture. The rich ecosystem of cloud services lets engineers extend the functionality of their serverless applications. Whether it's adding machine learning through AIaaS (AI-as-a-Service) platforms or using managed databases for persistent storage, the ability to integrate cloud services can significantly enhance the capabilities of serverless applications.

When evaluating the effectiveness of serverless technology, it is important to consider the security implications. Serverless architectures introduce a shared responsibility model, where the cloud provider is responsible for securing the underlying infrastructure, while the application owner is responsible for securing the code and data. Understanding the security considerations and implementing best practices, such as encryption and access control, can ensure the confidentiality and integrity of your serverless applications.

Additionally, monitoring and observability are critical aspects of evaluating the effectiveness of serverless technology. With serverless architectures, it is essential to have robust monitoring and logging mechanisms in place to gain insights into the performance and behavior of your applications. By leveraging cloud-native monitoring tools and implementing proper logging practices, engineers can proactively identify and address any issues, ensuring the smooth operation of their serverless applications.
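One logging practice that works well with most log aggregation tools is emitting one structured JSON entry per invocation. Here is a minimal sketch using only Python's standard library; the logger name, the field names, and the `logged_handler` function are assumptions chosen for illustration.

```python
import json
import logging
import time

logger = logging.getLogger("orders")  # hypothetical service name
logger.setLevel(logging.INFO)


def log_event(level, message, **fields):
    """Emit a single JSON object per log line so it can be parsed and queried."""
    logger.log(level, json.dumps({"message": message, **fields}, sort_keys=True))


def logged_handler(event, context=None):
    """Process an event and always record the outcome and duration."""
    start = time.perf_counter()
    request_id = event.get("request_id", "unknown")
    outcome = "error"  # assume failure until the work completes
    try:
        result = {"items": len(event["items"])}
        outcome = "ok"
        return result
    finally:
        duration_ms = round((time.perf_counter() - start) * 1000, 3)
        level = logging.INFO if outcome == "ok" else logging.ERROR
        log_event(level, "invocation finished",
                  request_id=request_id, outcome=outcome, duration_ms=duration_ms)
```

Because the log call sits in a `finally` block, failed invocations are recorded too, with the exception still propagating to the platform.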

Real-world applications can greatly benefit from serverless solutions across various domains. From handling image and video processing to managing large-scale data analytics, serverless technology offers a highly flexible and cost-effective approach.

Finally, aim for a balanced approach that considers both delivery speed and long-term code quality. While serverless technology enables rapid prototyping and short development cycles, it is crucial to strike the right balance between speed and maintainability. By following industry best practices such as modular design, proper error handling, and agile development methodologies, you can realize the full potential of serverless technology without compromising on code quality and long-term maintainability.

Unveiling the Unspoken Challenges of Serverless Technology

While serverless technology offers numerous benefits, it is not without its challenges. As engineers delve deeper into serverless, they will encounter unspoken challenges that may affect their application's performance and reliability. Let's explore some of these challenges and how engineers can address them.

One of the critical challenges engineers may encounter is the need for thorough up-front analysis. Serverless technology is relatively new compared to traditional server-based architectures, and the knowledge and expertise required to use it to its full potential are still evolving. Engineers often have to go beyond well-documented use cases and work out for themselves whether a given workload is a good fit. This requires an in-depth understanding of the underlying cloud platform, its limitations, and potential workarounds.

Another significant challenge in the realm of serverless technology is the issue of vendor lock-in. While serverless platforms offer convenience and scalability, they also tie developers to a specific cloud provider's ecosystem. This can limit flexibility and hinder portability between different platforms. Engineers need to carefully consider the long-term implications of choosing a particular serverless provider and strategize ways to mitigate the risks of vendor lock-in.
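One way to reduce the risk is to keep business logic free of provider-specific types and confine the platform details to thin adapters. The sketch below illustrates the idea; the event shapes, the function names, and the image-resizing example are all hypothetical and not tied to any particular provider.

```python
import json


def fit_within(width, height, max_side):
    """Provider-independent logic: scale dimensions down to fit within max_side."""
    scale = min(1.0, max_side / max(width, height))
    return {"width": round(width * scale), "height": round(height * scale)}


def http_adapter(event, context=None):
    """Adapter for a hypothetical HTTP-style event carrying a JSON string body."""
    body = json.loads(event["body"])
    result = fit_within(body["width"], body["height"], body.get("max_side", 1024))
    return {"statusCode": 200, "body": json.dumps(result)}


def queue_adapter(message):
    """Adapter for a hypothetical queue message that is already a dict."""
    return fit_within(message["width"], message["height"], message.get("max_side", 1024))
```

Moving to another platform then means rewriting the adapters, while `fit_within` and its tests stay unchanged.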

Furthermore, as serverless architectures rely heavily on third-party services and APIs, engineers must be vigilant about monitoring and managing dependencies. A minor change or disruption in a third-party service can have cascading effects on the entire application. It is crucial to implement robust monitoring and error-handling mechanisms to ensure the resilience of serverless applications in the face of external service failures.
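A typical error-handling mechanism for flaky dependencies is retrying with exponential backoff and falling back to a degraded response when retries run out. The following is a simplified sketch; `call_with_retry` and its parameters are illustrative names, and which exceptions count as transient is an assumption you would adjust for your own client library.

```python
import time


def call_with_retry(operation, attempts=3, base_delay=0.1, fallback=None,
                    retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call operation, retrying transient failures with exponential backoff.

    If every attempt fails, return fallback() when a fallback is given,
    otherwise re-raise the last error.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return operation()
        except retry_on as error:
            last_error = error
            if attempt < attempts - 1:
                # Wait base_delay, then 2x, 4x, ... between attempts.
                sleep(base_delay * (2 ** attempt))
    if fallback is not None:
        return fallback()
    raise last_error
```

Keep the total retry time well below the function's timeout, since every second spent waiting is also billed execution time.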

Where to go from here?

If you're inspired by the potential of serverless technology and ready to take your projects to the next level, Wednesday is here to guide you. With our expertise, we can help you navigate the complexities of serverless architecture. Learn more about Wednesday's Services and let's transform your serverless aspirations into reality.
